Set up Windows Server failover clusters

As a precursor to HA in SQL Server Standard Edition

Contents

Set up Windows Server failover clusters

List of acronyms used in this document:

A brief explanation of what WSFC is and why we use it:

Prerequisites for setting up WSFC

Additionally, verify the following account requirements:

Now we need to do the actual Clustering work

Step 1: Install Failover Cluster feature on all servers that will form part of the cluster

Step 2: Validate the configuration

Step 3: Run cluster validation tests

Step 4: Create the failover cluster

One last thing – Setting up Cluster-Aware Updating

Configure the nodes for remote management

Enable a firewall rule to allow automatic restarts

Enable Windows Management Instrumentation (WMI)

Enable Windows PowerShell and Windows PowerShell remoting

Install .NET Framework 4.6 or 4.5

Best practices recommendations for using Cluster-Aware Updating

Recommendations for applying Microsoft updates

Introduction

List of acronyms used in this document:

| Acronym | Description |
|---|---|
| WSFC | Windows Server Failover Cluster (a group of connected and interdependent servers used for reliability and availability of an environment) |
| HA | High Availability |
| AG | Availability Groups |
| BAG | Basic Availability Groups |
| HADR | High Availability and Disaster Recovery |
| FCI | Failover Cluster Instances |
| BADR | Basic Availability and Disaster Recovery |
| AD | Active Directory |
| DS | Domain Services |
| OU | Organisational Unit |
| CAU | Cluster-Aware Updating |

Explanation

In this walkthrough, we set up WSFC on Windows Server.

A brief explanation of what WSFC is and why we use it:

A WSFC is a group of Windows servers that work together to ensure high availability of applications and services.

Every WSFC (whether for an FCI or an AG) comprises the following components:

| Component | Definition |
|---|---|
| Node | Any server that participates in the cluster. An odd number of quorum votes is preferable; when there is an even number of nodes, a witness (for example, a disk or file share witness) acts as the tiebreaker. |
| Cluster Resource | A physical or logical entity that can be owned by a node, brought online and taken offline, moved between nodes, and managed as a cluster object. A cluster resource can be owned by only a single node at any point in time. |
| Role | A collection of cluster resources managed as a single cluster object to provide specific functionality. |
| Network name resource | A logical server name that is managed as a cluster resource. A network name resource must be used with an IP address resource. These entries may require objects in Active Directory Domain Services and/or DNS. |
| Resource dependency | A resource on which another resource depends. If resource A depends on resource B, then B is a dependency of A. Resource A will not be able to start without resource B. |
| Preferred owner | A node on which a resource group prefers to run. Each resource group is associated with a list of preferred owners sorted in order of preference. During automatic failover, the resource group is moved to the next preferred node in the preferred owner list. |
| Possible owner | A secondary node on which a resource can run. Each resource group is associated with a list of possible owners. Roles can fail over only to nodes that are listed as possible owners. |
| Quorum mode | The quorum configuration in a failover cluster that determines the number of node failures that the cluster can sustain. |
| Force quorum | The process to start the cluster even though only a minority of the elements that are required for quorum are in communication. |
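To make the Quorum mode row concrete, here is a worked example under the classic vote-majority model. This is a simplification: it ignores dynamic quorum and dynamic witness, which let recent Windows Server versions adjust votes as nodes leave. If the cluster has V quorum votes (nodes plus any witness), it keeps running only while a strict majority q of those votes is online, so it tolerates f vote failures:

$$
q = \left\lfloor \frac{V}{2} \right\rfloor + 1, \qquad f = V - q
$$

For the lab described later (two database nodes plus a file share witness), V = 3 gives q = 2 and f = 1. The same two nodes without a witness give V = 2, q = 2 and f = 0, and four votes give q = 3 and still only f = 1, which is why an odd number of votes is preferred.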

WSFC provides infrastructure features that support the high-availability and disaster recovery scenarios of hosted server applications.

The nodes in a WSFC work together to collectively provide these types of capabilities:

- Distributed metadata and notifications

- Resource management

- Health monitoring

- Failover coordination

Prerequisites for setting up WSFC

- Make sure that all servers that you want to add as cluster nodes are running the same version of Windows Server.

- Review the hardware requirements to make sure that your configuration is supported.

- Make sure that all servers that you want to add as cluster nodes are joined to the same Active Directory domain.

- Optionally, create an organizational unit (OU) and move the computer accounts for the servers that you want to add as cluster nodes into the OU. As a best practice, we recommend that you place failover clusters in their own OU in AD DS. This can help you better control which Group Policy settings or security template settings affect the cluster nodes. Isolating clusters in their own OU also helps protect against accidental deletion of cluster computer objects.
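The checks above can be scripted. A minimal sketch, assuming the ActiveDirectory PowerShell module (part of RSAT) is available and using hypothetical names: nodes DB1 and DB2, domain contoso.com and an OU called Clusters.

```powershell
# Hypothetical names: replace DB1, DB2 and the contoso.com DNs with your own.
$nodes = 'DB1', 'DB2'

# 1. Confirm every node runs the same Windows Server version.
Get-CimInstance -ClassName Win32_OperatingSystem -ComputerName $nodes |
    Select-Object CSName, Caption, Version

# 2. Confirm every node is joined to the expected domain.
Get-CimInstance -ClassName Win32_ComputerSystem -ComputerName $nodes |
    Select-Object Name, Domain, PartOfDomain

# 3. Optional: create a dedicated OU (protected from accidental deletion)
#    and move the node computer accounts into it.
Import-Module ActiveDirectory
New-ADOrganizationalUnit -Name 'Clusters' -Path 'DC=contoso,DC=com' `
    -ProtectedFromAccidentalDeletion $true
foreach ($node in $nodes) {
    Get-ADComputer -Identity $node |
        Move-ADObject -TargetPath 'OU=Clusters,DC=contoso,DC=com'
}
```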

Additionally, verify the following account requirements:

- Make sure that the account you want to use to create the cluster is a domain user who has administrator rights on all servers that you want to add as cluster nodes.

- Make sure that either of the following is true:

  - The user who creates the cluster has the Create Computer objects permission to the OU or the container where the servers that will form the cluster reside.

  - If the user does not have the Create Computer objects permission, ask a domain administrator to prestage a cluster computer object for the cluster.
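If a domain administrator prestages the cluster computer object, a common approach is to create it disabled and grant the account that will create the cluster Full Control over it. A sketch, assuming the ActiveDirectory module and hypothetical names (cluster name SQLCLUSTER, OU Clusters, account CONTOSO\ClusterAdmin); dsacls is the built-in Windows tool for editing Active Directory permissions.

```powershell
# Run as a domain administrator. All names below are hypothetical.
Import-Module ActiveDirectory

# Create the cluster computer object disabled; it is brought into use
# when the cluster is created with this name.
New-ADComputer -Name 'SQLCLUSTER' -Path 'OU=Clusters,DC=contoso,DC=com' `
    -Description 'Failover cluster name object' -Enabled $false

# Grant the cluster-creating account Full Control (GA = generic all).
dsacls 'CN=SQLCLUSTER,OU=Clusters,DC=contoso,DC=com' /G 'CONTOSO\ClusterAdmin:GA'
```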

Now we need to do the actual Clustering work

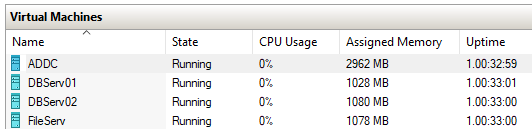

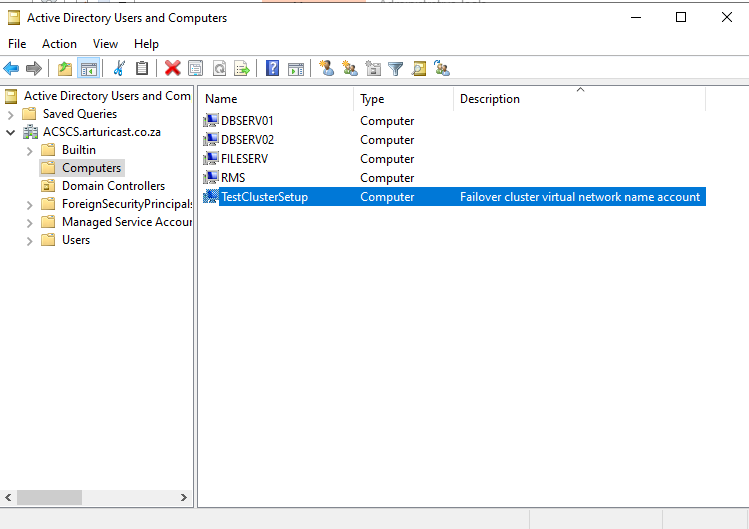

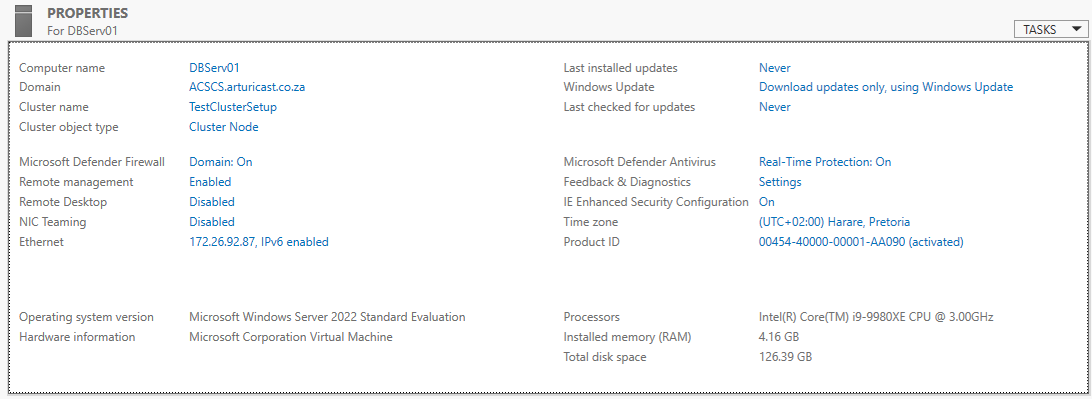

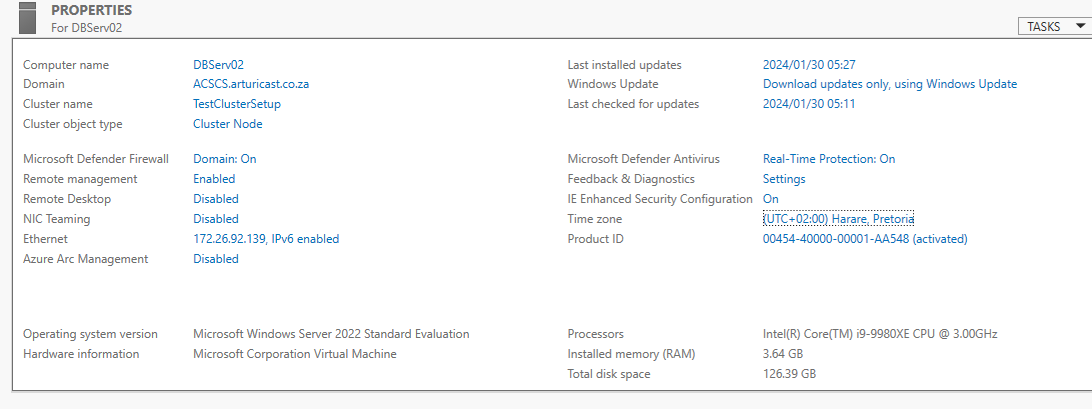

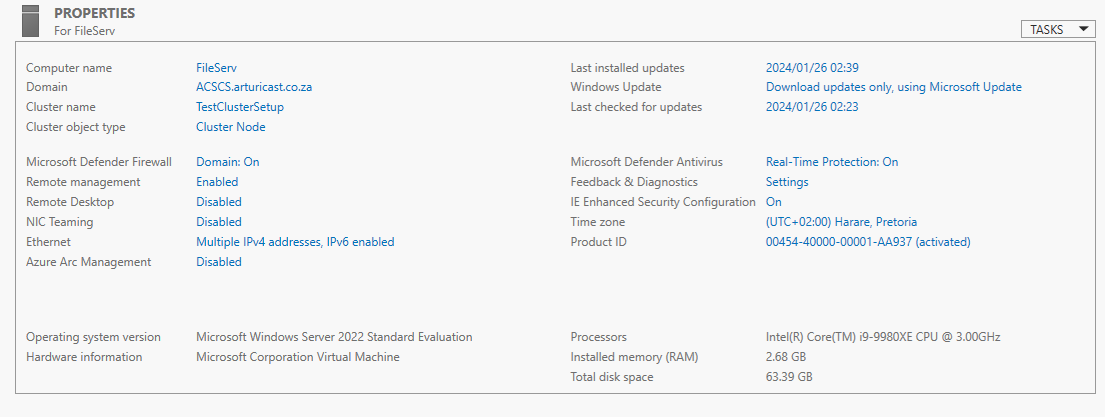

To showcase this, I have the following setup in my environment:

- Host: Windows Server 2022 Standard with Hyper-V

- Virtual machines:

  - Active Directory Domain Services: domain controller and DNS services (in the real world, these would be separate servers)

  - DB Server 1

  - DB Server 2

  - File Server (this hosts the file share witness, which acts as the tiebreaker)

The virtual machines:

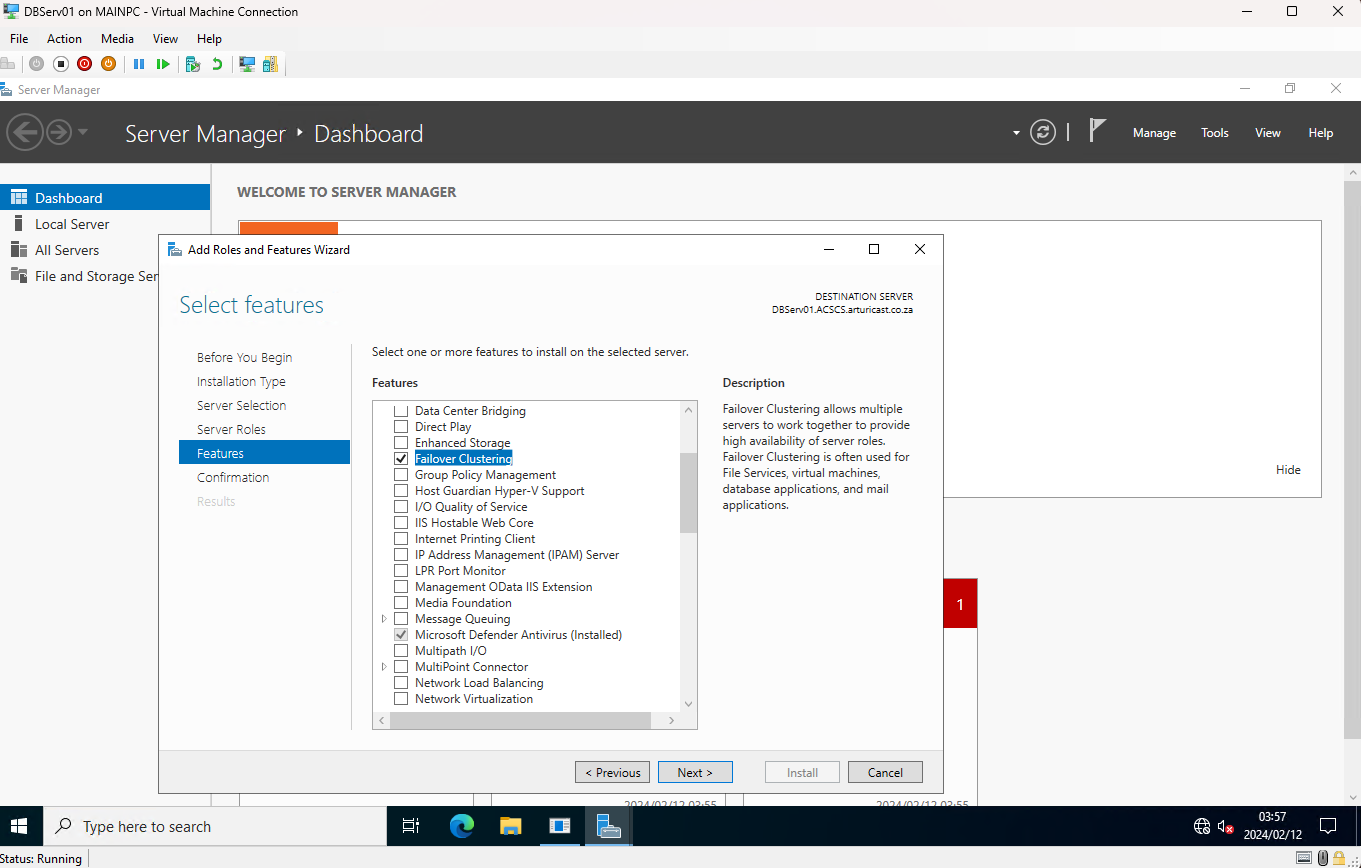

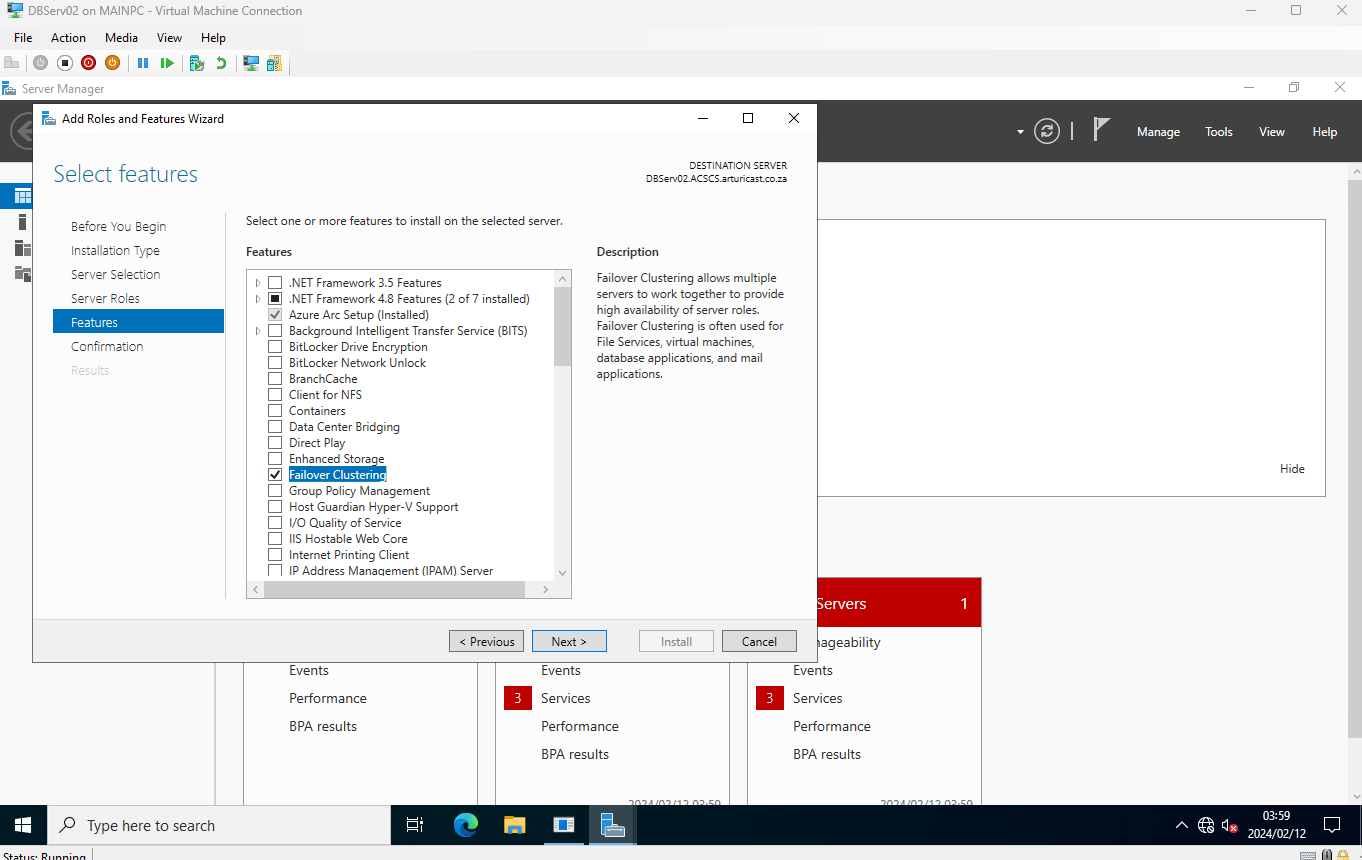

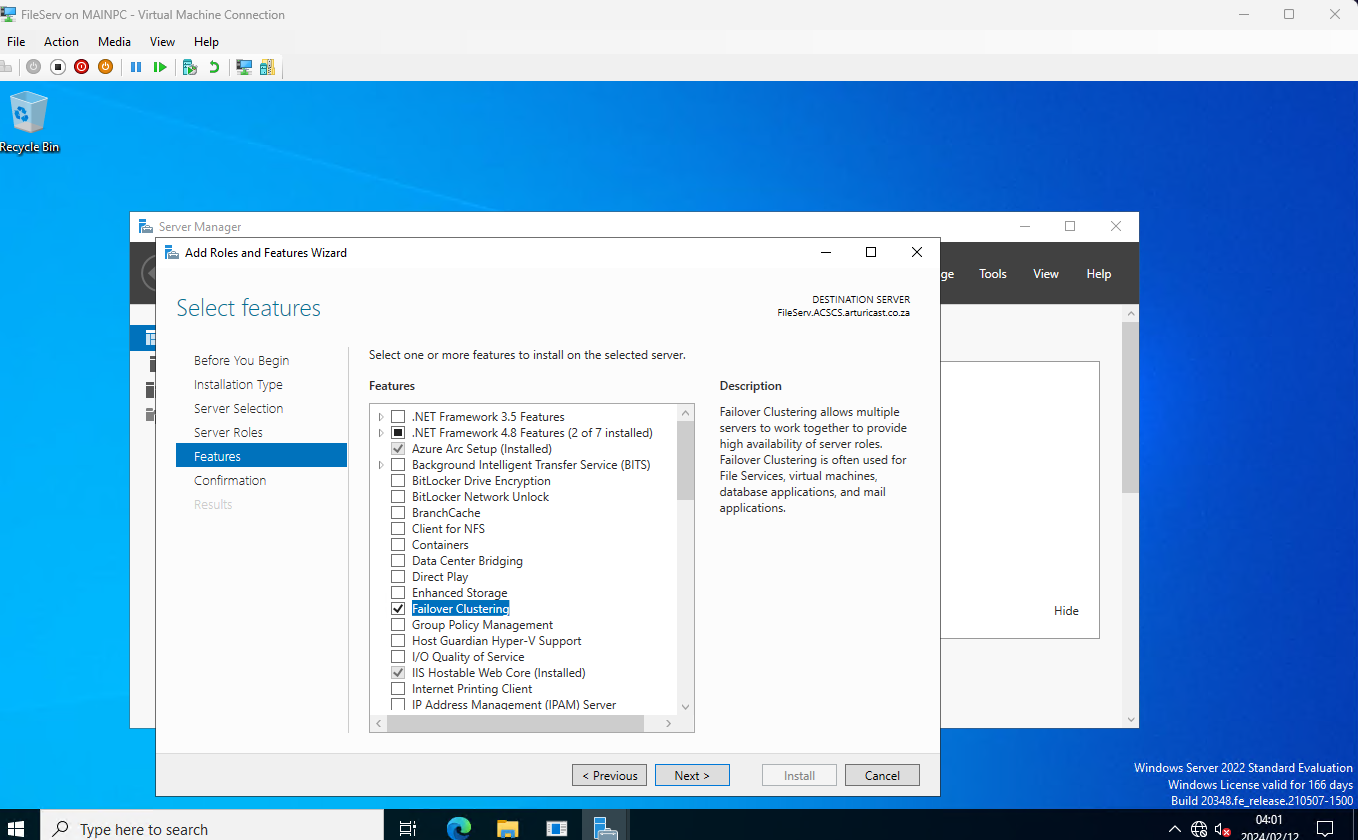

Step 1: Install Failover Cluster feature on all servers that will form part of the cluster
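The feature can be added through the Add Roles and Features Wizard in Server Manager on each server (select Failover Clustering) or with PowerShell. A minimal sketch, run from an elevated session and assuming hypothetical node names DB1 and DB2:

```powershell
# Hypothetical node names; list every server that will join the cluster.
$nodes = 'DB1', 'DB2'

foreach ($node in $nodes) {
    # -IncludeManagementTools also installs Failover Cluster Manager
    # and the FailoverClusters PowerShell module.
    # Check the RestartNeeded column of the output.
    Install-WindowsFeature -Name Failover-Clustering `
        -IncludeManagementTools -ComputerName $node
}

# Confirm the feature is installed on each node.
foreach ($node in $nodes) {
    Get-WindowsFeature -Name Failover-Clustering -ComputerName $node |
        Select-Object @{ n = 'Node'; e = { $node } }, Name, InstallState
}
```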

Step 2: Validate the configuration

Before you create the failover cluster, we strongly recommend that you validate the configuration to make sure that the hardware and hardware settings are compatible with failover clustering. Microsoft supports a cluster solution only if the complete configuration passes all validation tests and if all hardware is certified for the version of Windows Server that the cluster nodes are running.

The demo environment described above meets these requirements, so we continue.

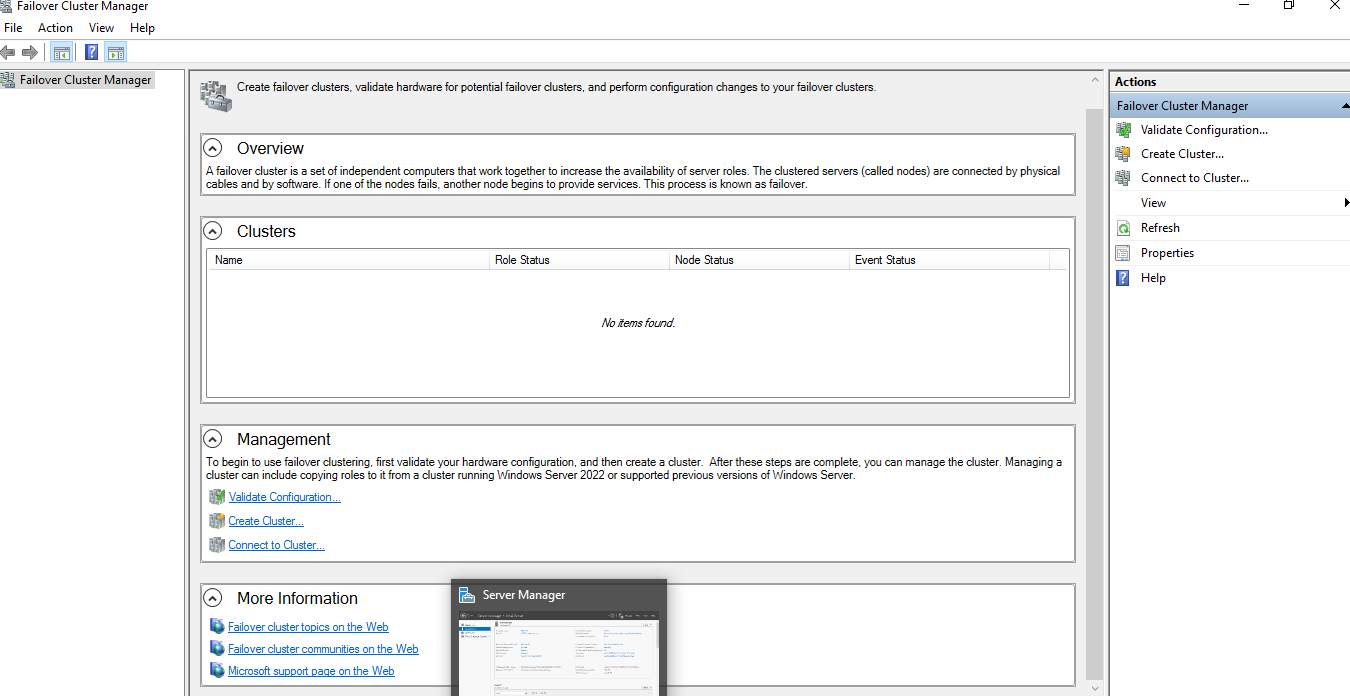

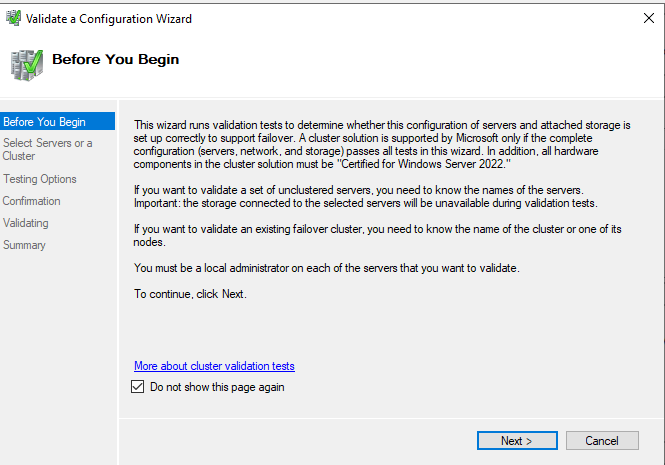

Step 3: Run cluster validation tests

- On a computer that has the Failover Cluster Management Tools installed from the Remote Server Administration Tools or on a server where you installed the Failover Clustering feature, start Failover Cluster Manager. To do this on a server, start Server Manager and then on the Tools menu, select Failover Cluster Manager.

- In the Failover Cluster Manager pane under Management, select Validate Configuration.

- On the Before You Begin page, select Next.

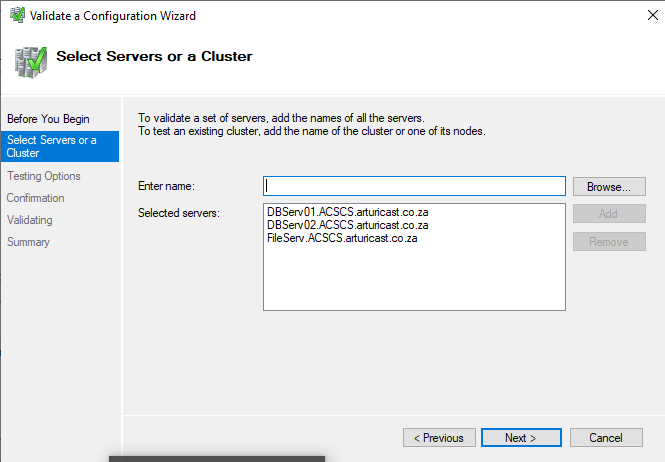

- On the Select Servers or a Cluster page, in the Enter name box, enter the NetBIOS name or the fully qualified domain name of a server that you plan to add as a failover cluster node and then select Add. Repeat this step for each server that you want to add. To add multiple servers at the same time, separate the names by a comma or by a semicolon. For example, enter the names in the format server1.contoso.com, server2.contoso.com. When you are finished, select Next.

- On the Testing Options page, select Run all tests (recommended) and then select Next.

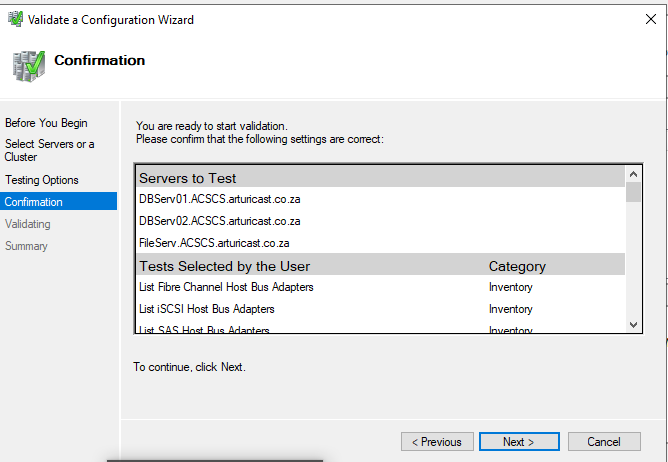

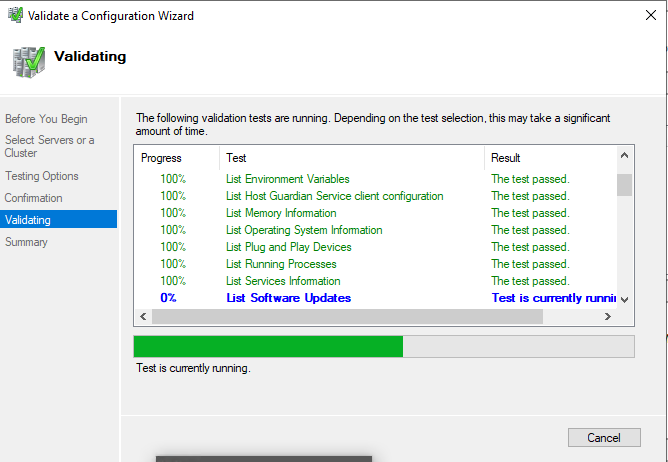

- On the Confirmation page, select Next. The Validating page displays the status of the running tests.


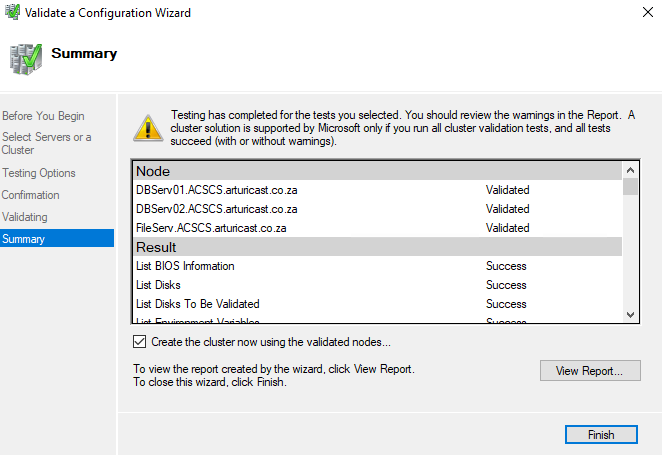

On the Summary page, do one of the following:

- If the results indicate that the tests completed successfully, the configuration is suited for clustering, and you want to create the cluster immediately, make sure that the Create the cluster now using the validated nodes check box is selected and then select Finish. Then continue with Step 4: Create the failover cluster, starting at the Before You Begin page of the Create Cluster Wizard.

- If the results indicate that there were warnings or failures, select View Report to view the details and determine which issues must be corrected. Note that a warning for a particular validation test indicates that this aspect of the failover cluster can be supported but might not meet the recommended best practices.
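The same validation can be run from PowerShell. A minimal sketch, assuming the FailoverClusters module installed in Step 1 and hypothetical node names DB1 and DB2:

```powershell
Import-Module FailoverClusters

# Run the full validation suite against the candidate nodes (hypothetical
# names). The cmdlet summarises the results and saves an HTML report;
# review any warnings or failures in that report before continuing.
Test-Cluster -Node 'DB1', 'DB2'
```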

Step 4: Create the failover cluster

To complete this step, make sure that the user account that you log on as meets the account requirements listed under Prerequisites for setting up WSFC.

- Start Server Manager.

- On the Tools menu, select Failover Cluster Manager.

- In the Failover Cluster Manager pane, under Management, select Create Cluster.

The Create Cluster Wizard opens.

- On the Before You Begin page, select Next.

- If the Select Servers page appears, in the Enter name box enter the NetBIOS name or the fully qualified domain name of a server that you plan to add as a failover cluster node and then select Add. Repeat this step for each server that you want to add. To add multiple servers at the same time, separate the names by a comma or a semicolon. For example, enter the names in the format server1.contoso.com; server2.contoso.com. When you are finished, select Next.

Note

If you chose to create the cluster immediately after running validation in Step 3, you will not see the Select Servers page. The nodes that were validated are automatically added to the Create Cluster Wizard so that you do not have to enter them again.

- If you skipped validation earlier, the Validation Warning page appears. We strongly recommend that you run cluster validation. Only clusters that pass all validation tests are supported by Microsoft. To run the validation tests, select Yes, and then select Next. Complete the Validate a Configuration Wizard as described in Step 3: Run cluster validation tests.

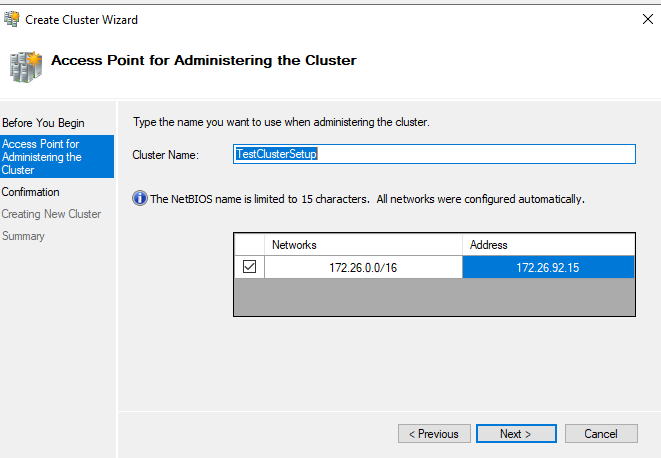

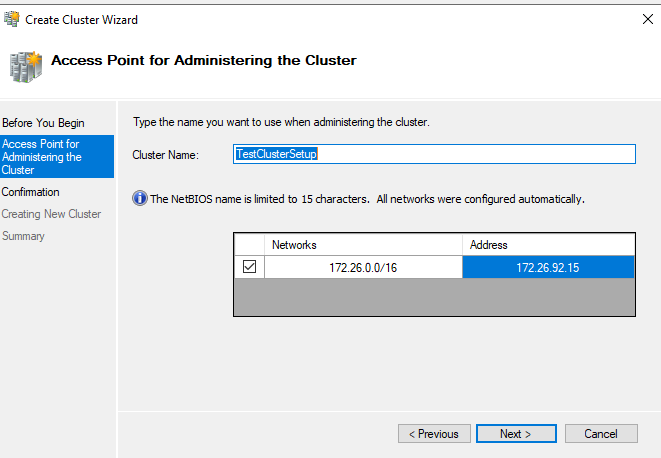

On the Access Point for Administering the Cluster page, do the following:

- In the Cluster Name box, enter the name that you want to use to administer the cluster. Before you do, review the following information:

  - During cluster creation, this name is registered as the cluster computer object (also known as the cluster name object or CNO) in AD DS. If you specify a NetBIOS name for the cluster, the CNO is created in the same location where the computer objects for the cluster nodes reside. This can be either the default Computers container or an OU.

  - To specify a different location for the CNO, you can enter the distinguished name of an OU in the Cluster Name box. For example: CN=ClusterName, OU=Clusters, DC=Contoso, DC=com.

  - If a domain administrator has prestaged the CNO in a different OU than where the cluster nodes reside, specify the distinguished name that the domain administrator provides.

- If the server does not have a network adapter that is configured to use DHCP, you must configure one or more static IP addresses for the failover cluster. Select the check box next to each network that you want to use for cluster management. Select the Address field next to a selected network and then enter the IP address that you want to assign to the cluster. This IP address (or addresses) will be associated with the cluster name in Domain Name System (DNS).

- When you are finished, select Next.

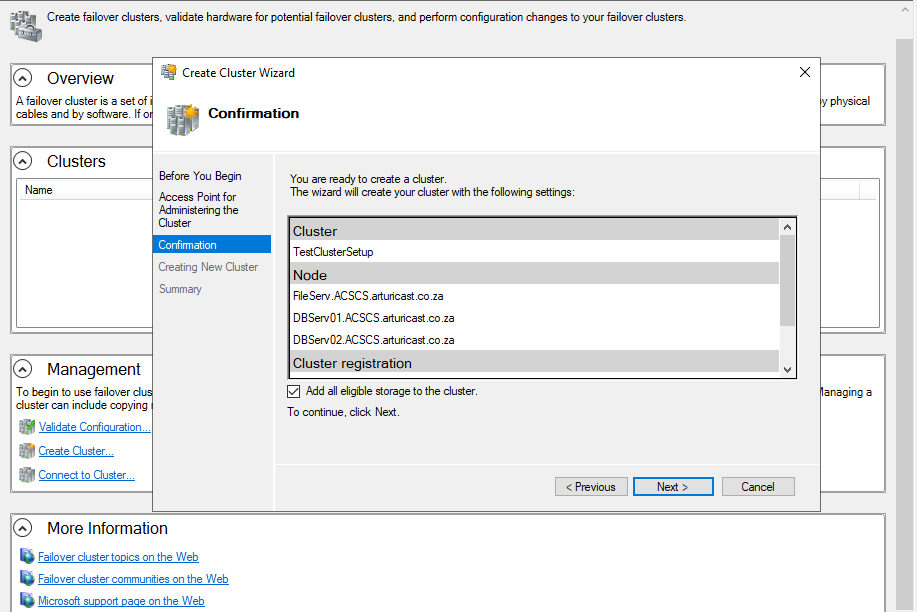

- On the Confirmation page, review the settings. By default, the Add all eligible storage to the cluster check box is selected. Clear this check box if you want to do either of the following:

- You want to configure storage later.

- You plan to create clustered storage spaces through Failover Cluster Manager or through the Failover Clustering Windows PowerShell cmdlets and have not yet created storage spaces in File and Storage Services. For more information, see Deploy Clustered Storage Spaces.

- Select Next to create the failover cluster.

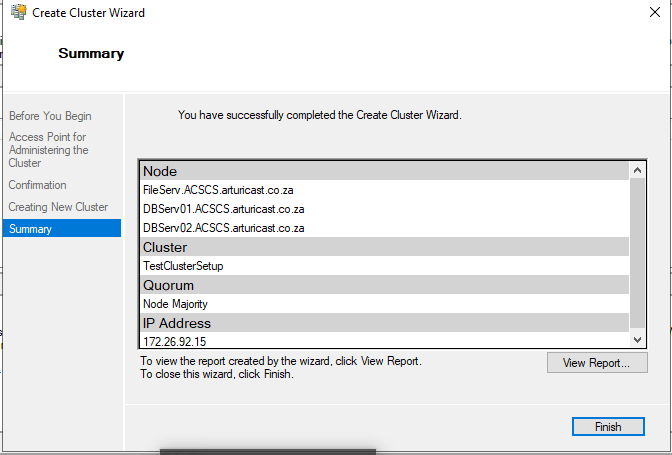

- On the Summary page, confirm that the failover cluster was successfully created. If there were any warnings or errors, view the summary output or select View Report to view the full report. Select Finish.

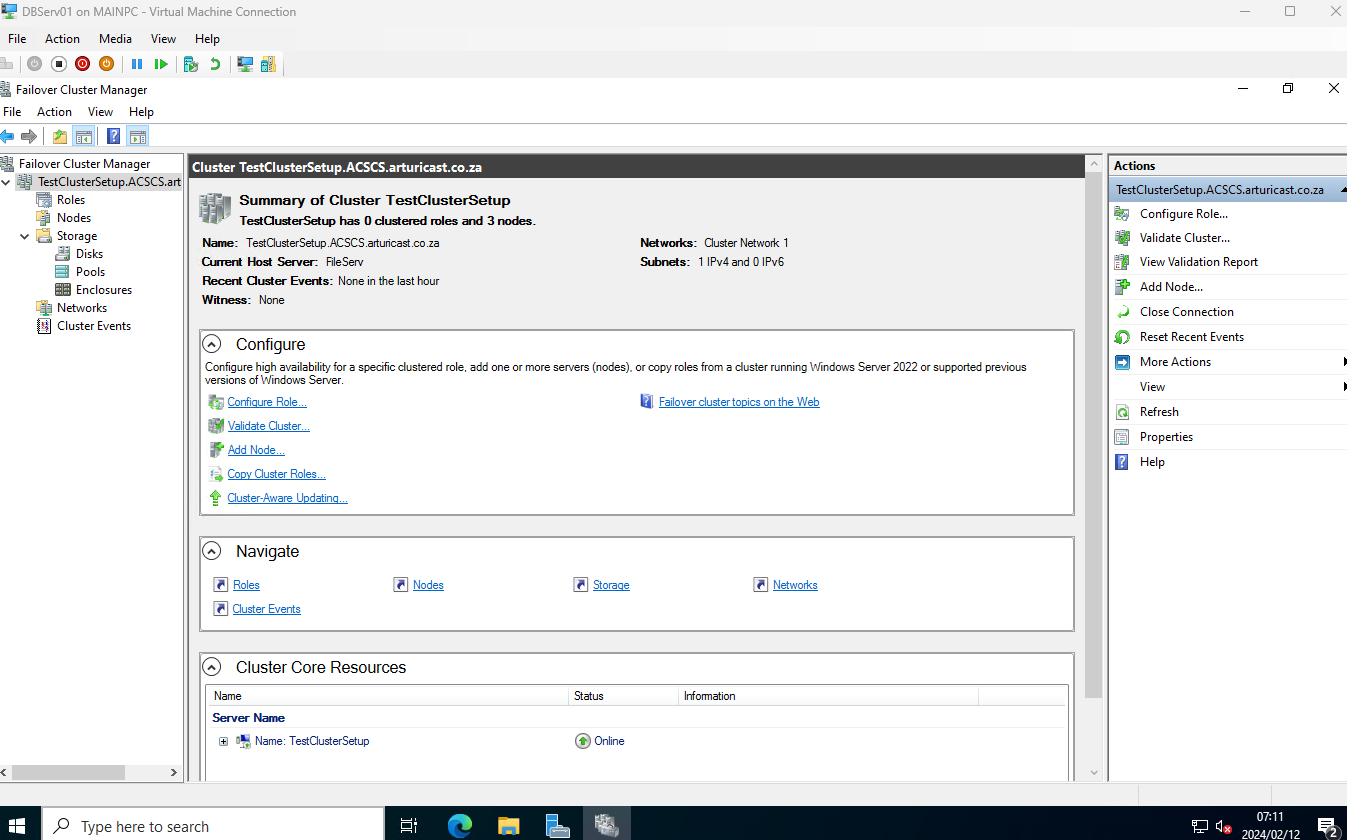

- To confirm that the cluster was created, verify that the cluster name is listed under Failover Cluster Manager in the navigation tree. You can expand the cluster name and then select items under Nodes, Storage or Networks to view the associated resources.

Note that it may take some time for the cluster name to successfully replicate in DNS. After successful DNS registration and replication, if you select All Servers in Server Manager, the cluster name should be listed as a server with a Manageability status of Online.

After the cluster is created, you can verify the cluster quorum configuration and, optionally, create Cluster Shared Volumes (CSV).

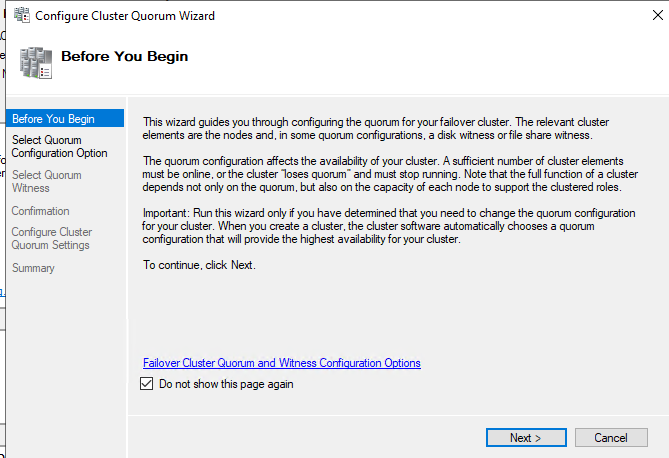
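The Create Cluster Wizard steps correspond roughly to the New-Cluster cmdlet. A minimal sketch, assuming the FailoverClusters module and hypothetical values (cluster name SQLCLUSTER, nodes DB1 and DB2, static address 192.168.1.50), followed by the post-creation checks described above:

```powershell
Import-Module FailoverClusters

# Hypothetical values: cluster name, node names and a free static IP address
# on the management network. -NoStorage matches clearing the
# "Add all eligible storage to the cluster" check box.
New-Cluster -Name 'SQLCLUSTER' -Node 'DB1', 'DB2' `
    -StaticAddress '192.168.1.50' -NoStorage

# Verify the result: node states, cluster networks and the quorum setup.
Get-ClusterNode -Cluster 'SQLCLUSTER'
Get-ClusterNetwork -Cluster 'SQLCLUSTER'
Get-ClusterQuorum -Cluster 'SQLCLUSTER'

# The cluster name may take a while to register and replicate in DNS.
Resolve-DnsName -Name 'SQLCLUSTER'
```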

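Step 5: Create a witness

Next, create a quorum witness. In a two-node cluster, the witness supplies the tiebreaking third vote, so the cluster can keep running if one node fails.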

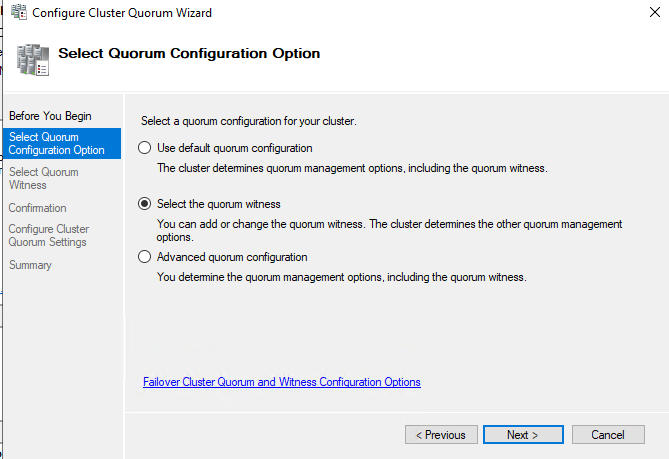

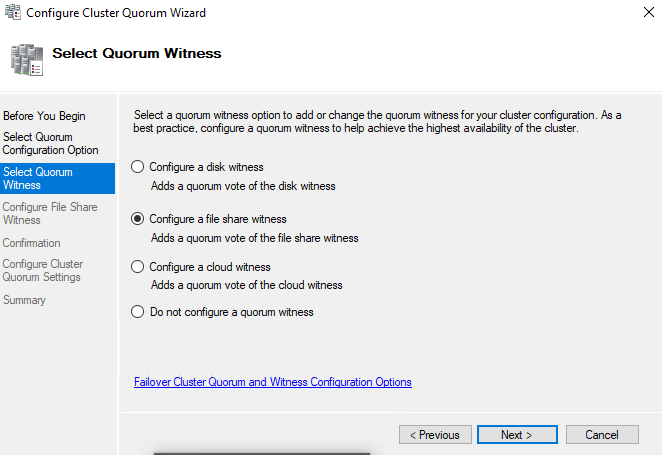

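- In Failover Cluster Manager, right-click the new cluster, select More Actions, and then select Configure Cluster Quorum Settings.

- On the Select Quorum Configuration Option page, choose “Select the quorum witness”.

- The Select Quorum Witness page offers several witness types (disk, file share and, on Windows Server 2016 and later, cloud). Choose the one that suits your setup; in my lab I chose Configure a file share witness.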

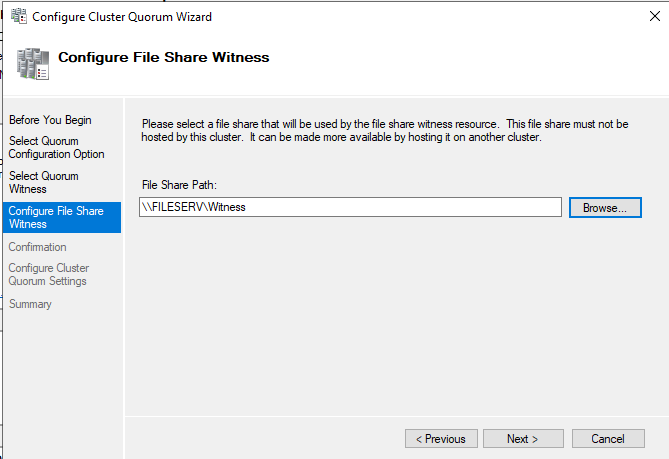

- On the Configure File Share Witness page, enter or browse to the path of the share on the file server.

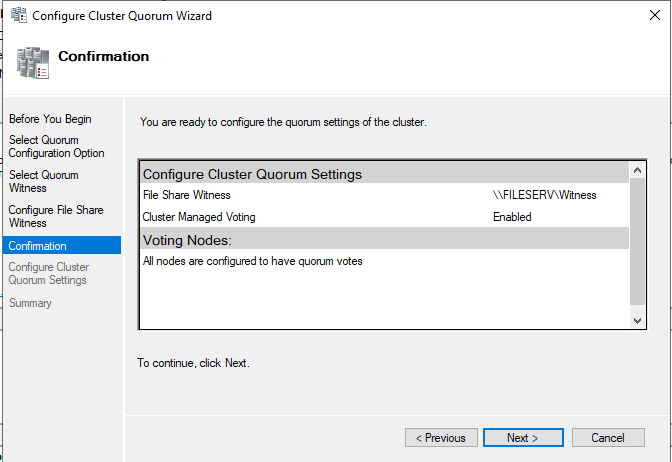

- Review the Confirmation page and, if everything is in order, select Next.

If everything works according to plan, the file share witness is created and added to the cluster's resources.

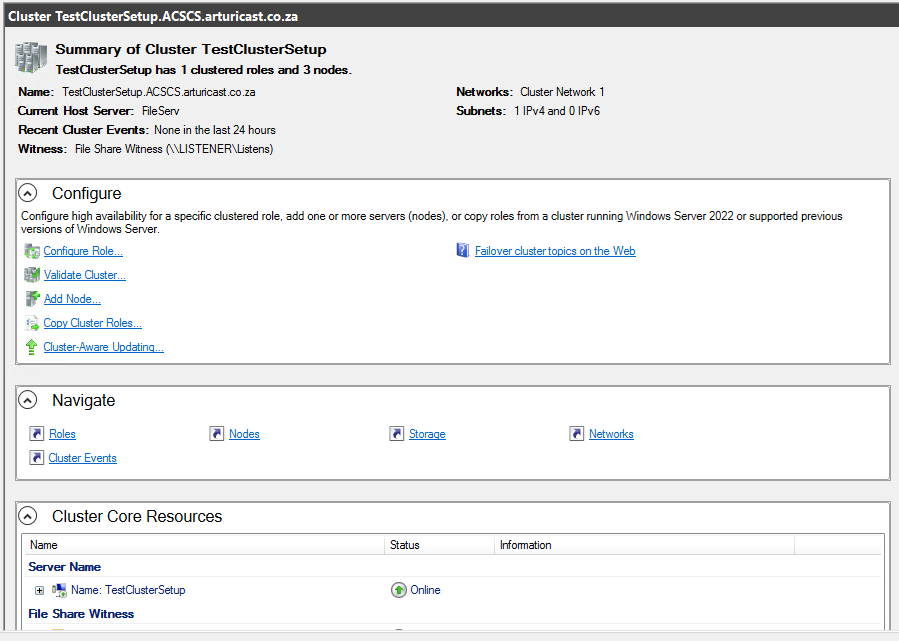
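The witness can also be configured with Set-ClusterQuorum. A minimal sketch, assuming the FailoverClusters module, a hypothetical cluster name SQLCLUSTER and a hypothetical share \\FS1\Witness on the file server:

```powershell
Import-Module FailoverClusters

# Hypothetical share on the file server. The cluster's computer account
# (CNO, e.g. CONTOSO\SQLCLUSTER$) needs permission to write to this share.
Set-ClusterQuorum -Cluster 'SQLCLUSTER' -FileShareWitness '\\FS1\Witness'

# Confirm the witness is now part of the quorum configuration.
Get-ClusterQuorum -Cluster 'SQLCLUSTER' | Format-List *
```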

Final Outcomes

The local server properties now reflect:

Now that we have a fully witnessed WSFC, any additional setup (e.g. FCI, HADR, BADR) is much simpler.

One last thing – Setting up Cluster-Aware Updating

Now that WSFC has been set up, we need to carefully consider how to apply updates.

Do not update the nodes haphazardly. To this end, once the Failover Clustering management tools have been installed, configure Cluster-Aware Updating.

Feature description

Cluster-Aware Updating is an automated feature that enables you to update servers in a failover cluster with little or no loss in availability during the update process. During an Updating Run, Cluster-Aware Updating transparently performs the following tasks:

- Puts each node of the cluster into node maintenance mode.

- Moves the clustered roles off the node.

- Installs the updates and any dependent updates.

- Performs a restart if necessary.

- Brings the node out of maintenance mode.

- Restores the clustered roles on the node.

- Moves to update the next node.

For many clustered roles in the cluster, the automatic update process triggers a planned failover. This can cause a transient service interruption for connected clients. However, in the case of continuously available workloads, such as Hyper-V with live migration or file server with SMB Transparent Failover, Cluster-Aware Updating can coordinate cluster updates with no impact to the service availability.

Practical applications

- CAU reduces service outages in clustered services, reduces the need for manual updating workarounds, and makes the end-to-end cluster updating process more reliable for the administrator. When the CAU feature is used in conjunction with continuously available cluster workloads, such as continuously available file servers (file server workload with SMB Transparent Failover) or Hyper-V, the cluster updates can be performed with zero impact to service availability for clients.

- CAU facilitates the adoption of consistent IT processes across the enterprise. Updating Run Profiles can be created for different classes of failover clusters and then managed centrally on a file share to ensure that CAU deployments throughout the IT organization apply updates consistently, even if the clusters are managed by different lines-of-business or administrators.

- CAU can schedule Updating Runs on regular daily, weekly, or monthly intervals to help coordinate cluster updates with other IT management processes.

- CAU provides an extensible architecture to update the cluster software inventory in a cluster-aware fashion. This can be used by publishers to coordinate the installation of software updates that are not published to Windows Update or Microsoft Update or that are not available from Microsoft, for example, updates for non-Microsoft device drivers.

- CAU self-updating mode enables a “cluster in a box” appliance (a set of clustered physical machines, typically packaged in one chassis) to update itself. Typically, such appliances are deployed in branch offices with minimal local IT support to manage the clusters. Self-updating mode offers great value in these deployment scenarios.

Important functionality

The following is a description of important Cluster-Aware Updating functionality:

- A user interface (UI) – the Cluster-Aware Updating window – and a set of cmdlets that you can use to preview, apply, monitor, and report on the updates

- An end-to-end automation of the cluster-updating operation (an Updating Run), orchestrated by one or more Update Coordinator computers

- A default plug-in that integrates with the existing Windows Update Agent (WUA) and Windows Server Update Services (WSUS) infrastructure in Windows Server to apply important Microsoft updates

- A second plug-in that can be used to apply Microsoft hotfixes, and that can be customized to apply non-Microsoft updates

- Updating Run Profiles that you configure with settings for Updating Run options, such as the maximum number of times that the update will be retried per node. Updating Run Profiles enable you to rapidly reuse the same settings across Updating Runs and easily share the update settings with other failover clusters.

- An extensible architecture that supports new plug-in development to coordinate other node-updating tools across the cluster, such as custom software installers, BIOS updating tools, and network adapter or host bus adapter (HBA) updating tools.
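The preview, monitor, and report cmdlets mentioned above can be sketched as follows, assuming the ClusterAwareUpdating module (installed with the Failover Clustering tools) and a hypothetical cluster name SQLCLUSTER:

```powershell
Import-Module ClusterAwareUpdating

# Preview: list the updates the default Windows Update plug-in would apply
# to each node, without installing anything.
Invoke-CauScan -ClusterName 'SQLCLUSTER' -CauPluginName 'Microsoft.WindowsUpdatePlugin'

# Monitor: show a summary of an Updating Run that is in progress.
Get-CauRun -ClusterName 'SQLCLUSTER'

# Report: show the detailed results of the most recent Updating Run.
Get-CauReport -ClusterName 'SQLCLUSTER' -Last -Detailed
```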

Cluster-Aware Updating can coordinate the complete cluster updating operation in two modes:

- Self-updating mode: For this mode, the CAU clustered role is configured as a workload on the failover cluster that is to be updated, and an associated update schedule is defined. The cluster updates itself at scheduled times by using a default or custom Updating Run profile. During the Updating Run, the CAU Update Coordinator process starts on the node that currently owns the CAU clustered role, and the process sequentially performs updates on each cluster node. To update the current cluster node, the CAU clustered role fails over to another cluster node, and a new Update Coordinator process on that node assumes control of the Updating Run. In self-updating mode, CAU can update the failover cluster by using a fully automated, end-to-end updating process. An administrator can also trigger updates on-demand in this mode, or simply use the remote-updating approach if desired. In self-updating mode, an administrator can get summary information about an Updating Run in progress by connecting to the cluster and running the Get-CauRun Windows PowerShell cmdlet.

- Remote-updating mode: For this mode, a remote computer, which is called an Update Coordinator, is configured with the CAU tools. The Update Coordinator is not a member of the cluster that is updated during the Updating Run. From the remote computer, the administrator triggers an on-demand Updating Run by using a default or custom Updating Run profile. Remote-updating mode is useful for monitoring real-time progress during the Updating Run, and for clusters that are running on Server Core installations.
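The two modes map onto different cmdlets, and in practice you would normally choose one. A sketch, assuming the ClusterAwareUpdating module and a hypothetical cluster name SQLCLUSTER; the schedule and retry values are illustrative, not recommendations.

```powershell
Import-Module ClusterAwareUpdating

# Self-updating mode: add the CAU clustered role to the cluster with a
# schedule. Illustrative schedule: the second Sunday of every month.
Add-CauClusterRole -ClusterName 'SQLCLUSTER' `
    -CauPluginName 'Microsoft.WindowsUpdatePlugin' `
    -DaysOfWeek Sunday -WeeksOfMonth 2 `
    -MaxRetriesPerNode 3 -EnableFirewallRules -Force

# Remote-updating mode: run this on an Update Coordinator that is NOT a
# cluster node to start an on-demand Updating Run.
Invoke-CauRun -ClusterName 'SQLCLUSTER' `
    -CauPluginName 'Microsoft.WindowsUpdatePlugin' `
    -MaxFailedNodes 0 -MaxRetriesPerNode 3 `
    -RequireAllNodesOnline -EnableFirewallRules -Force
```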

Configure the nodes for remote management

To use Cluster-Aware Updating, all nodes of the cluster must be configured for remote management. By default, the only task you must perform to configure the nodes for remote management is to enable a firewall rule to allow automatic restarts.

The following table lists the complete remote management requirements, in case your environment diverges from the defaults.

These requirements are in addition to installing the Failover Clustering feature and management tools (Step 1) and the general clustering requirements described in the previous sections.

| Requirement | Default state | Self-updating mode | Remote-updating mode |
|---|---|---|---|
| Enable a firewall rule to allow automatic restarts | Disabled | Required on all cluster nodes if a firewall is in use | Required on all cluster nodes if a firewall is in use |
| Enable Windows Management Instrumentation (WMI) | Enabled | Required on all cluster nodes | Required on all cluster nodes |
| Enable Windows PowerShell 3.0 or 4.0 and Windows PowerShell remoting | Enabled | Required on all cluster nodes | Required on all cluster nodes to run, for example, the Save-CauDebugTrace cmdlet |
| Install .NET Framework 4.6 or 4.5 | Enabled | Required on all cluster nodes | Required on all cluster nodes to run, for example, the Save-CauDebugTrace cmdlet |
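Rather than checking each requirement by hand, CAU ships a readiness test. A minimal sketch, assuming the ClusterAwareUpdating module and a hypothetical cluster name SQLCLUSTER:

```powershell
Import-Module ClusterAwareUpdating

# Run the CAU readiness checks (such as remote management, the restart
# firewall rule, PowerShell remoting and .NET Framework) against every
# node of the cluster. The cluster name is hypothetical.
Test-CauSetup -ClusterName 'SQLCLUSTER'
```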

Enable a firewall rule to allow automatic restarts

If Windows Firewall or a non-Microsoft firewall is in use on the cluster nodes, a firewall rule must be enabled on each node so that the node can restart automatically when an installed update requires it. The rule must allow the following traffic:

- Protocol: TCP

- Direction: Inbound

- Program: wininit.exe

- Ports: RPC Dynamic Ports

- Profile: Domain

If Windows Firewall is used on the cluster nodes, you can do this by enabling the Remote Shutdown Windows Firewall rule group on each cluster node. When you use the Cluster-Aware Updating window to apply updates and to configure self-updating options, the Remote Shutdown Windows Firewall rule group is automatically enabled on each cluster node.

Note

The Remote Shutdown Windows Firewall rule group cannot be enabled if doing so conflicts with Group Policy settings that are configured for Windows Firewall.

The Remote Shutdown firewall rule group is also enabled by specifying the -EnableFirewallRules parameter when running the following CAU cmdlets: Add-CauClusterRole, Invoke-CauRun, and Set-CauClusterRole.

The following PowerShell example shows an additional method to enable automatic restarts on a cluster node.

Set-NetFirewallRule -Group "@firewallapi.dll,-36751" -Profile Domain -Enabled True
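To apply the same rule on every node in one pass, a sketch assuming PowerShell remoting is enabled (it is by default) and hypothetical node names DB1 and DB2. The -Group form is used because the resource string does not depend on the display language of Windows.

```powershell
# Hypothetical node names.
$nodes = 'DB1', 'DB2'

Invoke-Command -ComputerName $nodes -ScriptBlock {
    # Enable the Remote Shutdown rule group for the Domain profile.
    Set-NetFirewallRule -Group '@firewallapi.dll,-36751' -Profile Domain -Enabled True

    # Show the resulting rules so the change can be confirmed per node.
    Get-NetFirewallRule -Group '@firewallapi.dll,-36751' |
        Select-Object DisplayName, Enabled, Profile
}
```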

Enable Windows Management Instrumentation (WMI)

All cluster nodes must be configured for remote management using Windows Management Instrumentation (WMI). This is enabled by default.

To manually enable remote management, do the following:

- In the Services console, start the Windows Remote Management service and set the startup type to Automatic.

- Run the Set-WSManQuickConfig cmdlet, or run the following command from an elevated command prompt:

winrm quickconfig -q

To support WMI remoting, if Windows Firewall is in use on the cluster nodes, the inbound firewall rule for Windows Remote Management (HTTP-In) must be enabled on each node. By default, this rule is enabled.
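A quick way to confirm these settings, sketched under the assumption of hypothetical node names DB1 and DB2:

```powershell
# Hypothetical node names.
$nodes = 'DB1', 'DB2'

foreach ($node in $nodes) {
    # Test-WSMan fails if WinRM is not reachable on the node;
    # -ErrorAction Stop turns that failure into a catchable exception.
    try {
        Test-WSMan -ComputerName $node -ErrorAction Stop | Out-Null
        Write-Output "$node : WinRM reachable"
    }
    catch {
        Write-Warning "$node : WinRM not reachable ($($_.Exception.Message))"
    }
}

# On a node itself: confirm the WinRM service starts automatically and the
# Windows Remote Management (HTTP-In) firewall rule is enabled.
Get-Service -Name WinRM | Select-Object Name, Status, StartType
Get-NetFirewallRule -DisplayName 'Windows Remote Management (HTTP-In)' |
    Select-Object DisplayName, Profile, Enabled
```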

Enable Windows PowerShell and Windows PowerShell remoting

To enable self-updating mode and certain CAU features in remote-updating mode, PowerShell must be installed and enabled to run remote commands on all cluster nodes. By default, PowerShell is installed and enabled for remoting.

To enable PowerShell remoting, use one of the following methods:

- Run the Enable-PSRemoting cmdlet.

- Configure a domain-level Group Policy setting for Windows Remote Management (WinRM).

For more information about enabling PowerShell remoting, see About Remote Requirements.
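A minimal sketch of enabling remoting and confirming it works on each node, assuming hypothetical node names DB1 and DB2; the version check mirrors the PowerShell 3.0 or later requirement in the table above.

```powershell
# On each node (elevated): enable remoting. -Force suppresses the prompts.
Enable-PSRemoting -Force

# From the management computer: confirm remoting works on every node and
# report whether each runs PowerShell 3.0 or later (hypothetical names).
Invoke-Command -ComputerName 'DB1', 'DB2' -ScriptBlock {
    [pscustomobject]@{
        Node              = $env:COMPUTERNAME
        PowerShellVersion = $PSVersionTable.PSVersion.ToString()
        Meets30OrLater    = $PSVersionTable.PSVersion.Major -ge 3
    }
}
```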

Install .NET Framework 4.6 or 4.5

To enable self-updating mode and certain CAU features in remote-updating mode, .NET Framework 4.6 or .NET Framework 4.5 (on Windows Server 2012 R2) must be installed on all cluster nodes. By default, .NET Framework is installed.

To install .NET Framework 4.6 (or 4.5) using PowerShell if it’s not already installed, use the following command:

Install-WindowsFeature -Name NET-Framework-45-Core
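To check which .NET Framework 4.x release each node has before installing anything, a sketch that reads the standard Release registry value, assuming hypothetical node names DB1 and DB2. A Release value of 378389 or higher indicates 4.5 or later; 393295 or higher indicates 4.6 or later.

```powershell
# Hypothetical node names.
Invoke-Command -ComputerName 'DB1', 'DB2' -ScriptBlock {
    $key = 'HKLM:\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full'
    # $release stays $null if .NET Framework 4.x is not installed,
    # and the comparisons below then evaluate to $false.
    $release = (Get-ItemProperty -Path $key -ErrorAction SilentlyContinue).Release
    [pscustomobject]@{
        Node         = $env:COMPUTERNAME
        Release      = $release
        Net45OrLater = $release -ge 378389
        Net46OrLater = $release -ge 393295
    }
}
```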

Best practices recommendations for using Cluster-Aware Updating

Recommendations for applying Microsoft updates

We recommend that when you begin to use CAU to apply updates with the default Microsoft.WindowsUpdatePlugin plug-in on a cluster, you stop using other methods to install software updates from Microsoft on the cluster nodes.

Caution

Combining CAU with methods that update individual nodes automatically (on a fixed time schedule) can cause unpredictable results, including interruptions in service and unplanned downtime.

We recommend that you follow these guidelines:

For optimal results, we recommend that you disable settings on the cluster nodes for automatic updating, for example, through the Automatic Updates settings in Control Panel, or in settings that are configured using Group Policy.

Caution

Automatic installation of updates on the cluster nodes can interfere with installation of updates by CAU and can cause CAU failures.

If they are needed, the following Automatic Updates settings are compatible with CAU, because the administrator can control the timing of update installation:

- Settings to notify before downloading updates and to notify before installation

- Settings to automatically download updates and to notify before installation

However, if Automatic Updates is downloading updates at the same time as a CAU Updating Run, the Updating Run might take longer to complete.
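The two compatible settings correspond to the Automatic Updates policy value AUOptions = 2 (notify before download and before installation) and AUOptions = 3 (download automatically, notify before installation). Group Policy is the usual way to set these; as a sketch only, assuming hypothetical node names and no domain GPO configuring the same policy (a GPO would overwrite these values at its next refresh), the policy registry values can be written directly:

```powershell
# Hypothetical node names.
Invoke-Command -ComputerName 'DB1', 'DB2' -ScriptBlock {
    $au = 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate\AU'
    # Create the key only if missing; New-Item -Force on an existing
    # registry key would recreate it and drop its existing values.
    if (-not (Test-Path -Path $au)) {
        New-Item -Path $au -Force | Out-Null
    }
    # 0 = Automatic Updates stays on, governed by AUOptions.
    # Set NoAutoUpdate to 1 instead to turn Automatic Updates off entirely.
    Set-ItemProperty -Path $au -Name NoAutoUpdate -Value 0 -Type DWord
    # 3 = download automatically, notify before installation.
    Set-ItemProperty -Path $au -Name AUOptions -Value 3 -Type DWord
}
```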

Do not configure an update system such as Windows Server Update Services (WSUS) to apply updates automatically (on a fixed time schedule) to cluster nodes.

All cluster nodes should be uniformly configured to use the same update source, for example, a WSUS server, Windows Update, or Microsoft Update.
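To spot nodes that point at different update sources, a sketch that reads the standard Windows Update policy values on each node, assuming hypothetical node names DB1 and DB2. WUServer is only present when a node is configured to use a WSUS server.

```powershell
# Hypothetical node names.
Invoke-Command -ComputerName 'DB1', 'DB2' -ScriptBlock {
    $wu = Get-ItemProperty -Path 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate' `
        -ErrorAction SilentlyContinue
    $au = Get-ItemProperty -Path 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate\AU' `
        -ErrorAction SilentlyContinue
    [pscustomobject]@{
        Node        = $env:COMPUTERNAME
        # Empty when no WSUS server is configured by policy.
        WUServer    = $wu.WUServer
        UseWUServer = $au.UseWUServer
    }
} | Select-Object Node, WUServer, UseWUServer
```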

If you use a configuration management system to apply software updates to computers on the network, exclude cluster nodes from all required or automatic updates. Examples of configuration management systems include Microsoft Endpoint Configuration Manager and Microsoft System Center Virtual Machine Manager 2008.

If internal software distribution servers (for example, WSUS servers) are used to contain and deploy the updates, ensure that those servers correctly identify the approved updates for the cluster nodes.